Transatlantic-o

From Consent to Command: Agentic AI & Open Banking Universal Data Access Framework

When AI agents begin executing financial tasks on our behalf, how do we ensure security and proper consent?

I propose merging Open Banking's consent architecture with AI protocols like MCP to create a Universal Data Access Framework—enabling AI assistants to act on our behalf while maintaining strict permission controls.

The future of financial services lies at this intersection, where data-rich consumers can leverage AI agents to make better decisions with less friction.

For those building the next generation of fintech and AI tools: we require standardized consent flows that work for both humans and their AI agents.

In this new era of the digital economy, data is as precious as metal. It is at times compared to a new form of gold, and nowhere is this truer than in financial services, a sector that is "information rich" and reliant on data for (all) decision-making.

As we transition into the next iteration of the digitalisation journey, which some call the era of Web 3.0, we are seeing the digital landscape evolve rapidly. Empowering consumers to leverage their data, which provides context for better decision-making, is at the centre of this new transition.

In this interconnected world we live in, security is paramount and serves as the foundational pillar for trust. To harness the full potential of this digital transformation, data needs to be accessed in a permissioned manner, with interoperability and standardization as the keys to unlocking its full benefits.

Enter the AI Agent – the efficient trusted partner, who will mine, administer and spend the gold on consumers' behalf!

In the fast transition from "boxed pre-digital" to "digitally native", where everyone and everything is becoming connected, we have become accustomed to the read and write aspects of the network economy. Today, with the introduction of AI agents, we are transitioning to the "command and own" stage via APIs and tokens. We are moving into a world where agents act on our behalf, speeding us away from the long-gone era of Web 1.0 when we scoured the web for links.

In order to live up to the promise of a world where data serves consumers, enhanced by AI agents, we need coordinated stakeholders, including the agents and their operators. Guaranteeing consent-based, secure and seamless access to data requires orchestrating them through adequate frameworks that can leverage already developed capabilities.

Through experience helping shape open finance and open data ecosystems, I have had the privilege to see how coordinated ecosystem development enables the creation of the right tooling and guardrails that allow consumers to see the benefits of the digital economy through trust. This is the building principle all stakeholders need to embrace.

Success will allow consumers to capture the benefits of the context their digital footprints will provide.

But how do we achieve this? The novel approach of the recently introduced Model Context Protocol (MCP) needs to be enhanced by merging its capabilities with open finance and open data frameworks for secure, standardized, interoperable sharing of data through a consent-based approach.

MCP meets Open Finance and Open Data

The integration of Open Banking and Open Finance frameworks with the Model Context Protocol (MCP) developed by Anthropic, “a new standard for connecting AI assistants to the systems where data lives”, presents a great opportunity to develop a unified standard for secure, permissioned, and interoperable data access.

Open Banking and Open Finance have been revolutionizing the financial sector in recent years by enabling standardized and secure data sharing between financial institutions and third-party providers, empowering consumers with greater control over their financial information. Meanwhile, the powerful capabilities brought by LLMs allow humans and organizations to use data in a contextualized way to enhance their decision-making.

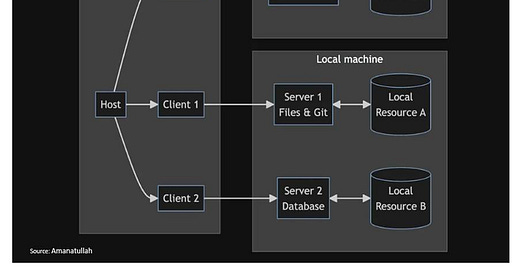

MCP was developed to give rich capabilities to agentic AI, offering a robust architecture for connecting AI systems with diverse data sources, enhancing context awareness and relevance.

As with all things data, integrations are one of the biggest challenges for developers. Multiply this challenge n-fold and you end up with custom integrations for every new tool and dataset, which are not only complex but expensive. For this reason, thinking about a universal connector seems natural.

A breakthrough arrived in November last year, when Anthropic, founded by Dario Amodei and Daniela Amodei in 2021, open-sourced what they called the Model Context Protocol, "a new standard for connecting AI assistants to the systems where data lives, including content repositories, business tools, and development environments". The objective of MCP is to tackle the challenge of information (data) silos by developing an open standard for connecting AI systems with data sources through a single protocol. MCP helps developers build agents and complex workflows on top of LLMs.

One of the key features of MCP (well explained in this Medium article) is the fact that “it is a broad protocol that standardizes the entire execution and interaction process between LLM applications and external systems. It not only handles the translation of user intent but also provides a structured framework for tool discovery, invocation, and response handling”.
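To make "tool discovery, invocation, and response handling" concrete, the sketch below builds the kind of messages an MCP client exchanges with a server. MCP frames its messages as JSON-RPC 2.0, with `tools/list` used to discover tools and `tools/call` to invoke one. The tool name `get_account_balances`, its arguments and the sample reply are hypothetical, and transport (stdio or HTTP) and session initialisation are left out, so treat this as an illustration of message shapes rather than a working client.

```python
import json
from itertools import count
from typing import Optional

_request_ids = count(1)  # JSON-RPC requests carry an id so replies can be matched


def jsonrpc_request(method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request object, the framing MCP uses for its messages."""
    message = {"jsonrpc": "2.0", "id": next(_request_ids), "method": method}
    if params is not None:
        message["params"] = params
    return message


def extract_text(response: dict) -> list[str]:
    """Return the text items of a tools/call result; raise if the server sent an error."""
    if "error" in response:
        raise RuntimeError(response["error"].get("message", "unknown JSON-RPC error"))
    content = response["result"].get("content", [])
    return [item["text"] for item in content if item.get("type") == "text"]


# 1. Discovery: ask the server which tools it exposes.
list_tools = jsonrpc_request("tools/list")

# 2. Invocation: call a discovered tool by name (hypothetical tool and arguments).
call_tool = jsonrpc_request(
    "tools/call",
    {"name": "get_account_balances", "arguments": {"account_id": "acc-123"}},
)

# 3. Response handling: a hypothetical server reply to the call above.
reply = {
    "jsonrpc": "2.0",
    "id": call_tool["id"],
    "result": {"content": [{"type": "text", "text": "GBP 1,250.00 available"}]},
}

print(json.dumps(list_tools))
print(extract_text(reply))
```

The same three steps (discover, call, interpret) apply whatever the tool is, which is what lets one protocol replace many bespoke integrations.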

The true innovation MCP brings to the table is the capacity to develop more sophisticated and autonomous AI agents, integrating relevant, up-to-date context into LLMs and eliminating the issue of "isolation of LLMs from real-world, up-to-date information" by enhancing access to real-time external knowledge.

Model context protocols are, without doubt, a solid step forward towards a broad use of agentic AI.

The missing piece of the puzzle? For many of these things to happen, we need to find a standardized way of accessing scattered data that can provide the required context for better decision-making. It is for this reason that the recent protocol development is so important.

The magic happens when merging these frameworks, extending the benefits of secure and standardized data sharing beyond the financial domain to encompass various types of data (sometimes private) and empowering both individuals and organizations with the execution capabilities agentic AI brings.

But first, let us rewind the clock and see how we got here.

From Web 1.0 to Web 3.0

In the initial era of Web 1.0, people and organizations shared mostly static, webpage-hosted information that was largely links to other sources on the web. We all gained access to information that was once hard to get or find. For all its gains, Web 1.0 lacked the capacity for interaction, and therefore the growth in applications that Web 2.0 brought. Connecting people became both the objective and the engine of growth of this second era of the web.

Yet this interconnected revolution came at a price. The interconnected world brought to life by Web 2.0 came with certain challenges: users lacked agency, interoperability was not an option, and unbalanced data governance surfaced, favouring the platforms where user information was stored.

Web 3.0 arrives as the answer, with the mission of making internet data machine-readable, thus solving some of Web 2.0's issues. How? By decoupling data, applications, and identities, and making the interaction between them interoperable through the creation of standards.

Long before the possibilities we are seeing today, the ambitions of Web 3.0 were laid out in 1999. Tim Berners-Lee, father of the World Wide Web, dreamt of a “Semantic Web” in which computers “become capable of analysing the data on the Web - content, links, and transactions between people and computers” in a world where “the day-to-day mechanisms of trade, bureaucracy and our daily lives will be handled by machines talking to machines. The ‘intelligent agents’ people have touted for ages will finally materialize".

One important clarification: let's not confuse Web 3.0 with Web3, a term coined by Ethereum co-founder Gavin Wood back in 2014 and defined as a "decentralized online ecosystem based on blockchain". Researchers at the University of Cambridge call it "the putative next generation of the web's technical, legal, and payments infrastructure—including blockchain, smart contracts and cryptocurrencies", equating it to the tooling required for agentic AI to perform tasks in a secure, interoperable, and transparent way.

Fast forward to today, and these intelligent agents no longer seem a foreign concept but a real one.

Back in 1999, computational capacity, scientific understanding and costs made it almost impossible to have agents that could be autonomous and capable of taking action while, at the same time, learning and refining their performance.

Now, the game has changed. Connecting this process with agentic AI means the concept of intelligent agents is taken to the next level, where they "proactively pursue goals, make decisions, and take actions over extended periods (of time), thereby exemplifying a novel form of digital agency".

For all of this to become a reality, data needs to flow in a secure, permissioned and transparent way: a way in which accountability can be traced and agency is present at every stage. For this to happen, we must have the right set-up in terms of rules and protocols.

For this new world, a clear set of protocols and standards needs to be developed in order to guarantee the benefits that the world of data and decentralisation can offer. To reap the benefits of interconnectedness at scale, interoperability is needed to give consumers the full value of data in the network economy.

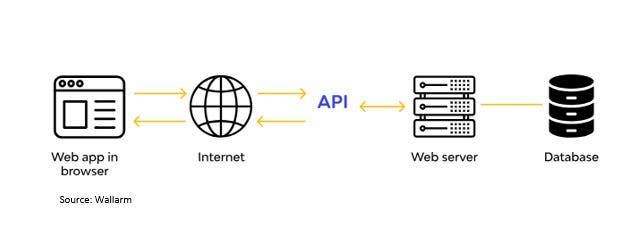

APIs, the tools and rules that allow software applications to communicate and share data or functionality with each other, have come to enable the Command and Own stage, giving value to data, the new gold.

Tokens? The means of transporting data and value (fiat, stablecoins or crypto) in all its forms (the essential units of data).

But this is just the beginning of this new era of agents and autonomy. Sooner rather than later, we will start to see how consumers can be enhanced and assisted by agentic AI, with commands leading to the execution of tasks that make the own stage easier and frictionless. We will see the burden of ordinary, day-to-day task execution, and of the decision-making that comes with it and so often leads to procrastination and inaction, evaporate. An agent will take command of our actions through a permissioned approach.

Imagine the possibilities: procrastinating inaction becomes a thing of the past.

We will see agents assisting with and executing tasks on our behalf (selecting and buying plane tickets, booking hotels, making restaurant reservations; deciding on the best option from a series of investment funds; choosing the best mortgage according to your needs; organizing personal and family finances, and many more). All of these tasks entail positive outcomes for individuals and organizations, with the ability to generate financial health and wellbeing if done at the right time, when needed.

But there's a catch. For all of this to happen in a secure, efficient way, a sound framework needs to be developed to build the necessary trust. Interestingly, there are playbooks already out there that we can leverage to establish the trust needed for data to flow in a secure, permissioned way.

What is this thing about Open Banking, Finance and Data?

So, what are these things called open banking, open finance, and open data?

It is a journey that began decades ago with the first dial-up banking services of the 1980s and was catapulted by the 2007 European Payment Services Directive and its 2015 successor, PSD2, which transformed the early vision into a set of rules, standards and systems: a secure, permissioned and trusted framework for sharing personal or corporate banking information with third parties such as other banks, financial institutions, and fintechs.

A new data economy was created, bringing new and existing products and services to underserved communities and to consumers who had never been served. Suddenly, digital footprints created access. They became a path toward inclusion.

Enter the game-changer: APIs. This paradigm shift required a data intermediary allowing different systems, or computer programs, to communicate with each other in a permissioned way. The result? A doorway to the realm of open data, where the richness of data can be used as context for the design of better, timelier and more efficient products and services through a permissioned approach.

Now comes the AI evolution. We are moving into a new world where the possibilities of making data valuable for consumers can be augmented by AI. Large language models (LLMs) have brought context into action, providing capabilities for agentic AI and expanding what artificial intelligence can do for consumers, both individuals and organizations.

What is Agentic AI?

According to a Citi GPS report, Agentic AI is "an artificial intelligence that can make autonomous decisions without human intervention". The same report states "this paradigm shift is powered by a combination of technological breakthroughs in contextual understanding, memory, and multi-tasking abilities".

With great power comes responsibility. While agentic AI offers efficiency and innovation, the World Economic Forum (WEF) considers that it raises concerns that need to be addressed, one of them being governance. It is for this precise reason that developing robust oversight and frameworks is necessary for this leap forward to benefit the many, despite the challenges that may arise.

Capitalizing on the capabilities provided by open banking (as well as open finance and open data) architecture, consumers will be empowered to make better decisions with less friction in a secure and timely manner. If done right, we can speed into a new era where individuals will be powered by bots (agents) capable of performing diverse tasks (e.g. selecting products to invest in, deciding on loan or insurance providers, planning personal or business finances) while at the same time executing transactions.

Time is the ultimate currency. As the saying goes, time is money, and time is of utmost importance when talking about financial services. How often have you delayed an important financial decision because you did not want to start the burdensome process it requires? How much has it cost you? Imagine deciding on the best option assisted by an AI agent that understands your needs and finances, and that can clearly and transparently put in front of you the best available alternative aligned with your circumstances (context). Once the most appropriate option is selected, your agent commands and actions the process with no friction and minimal effort. Your purchased asset or service will be a click away, without the hassle it normally entails. Less hassle, less cost, more transparency, with your AI agent doing the heavy lifting behind the scenes. The benefits are clear, yet the biggest challenge is making this accessible to the masses in a world where resources, education and technology are not evenly distributed.

But there's still a missing link. What seems to be missing is a framework that brings clarity and transparency to data access through a consent-based approach. Understanding open finance and open data ecosystem developments, including the standards used, can help architect a robust framework for the safe use of AI agents by consumers and organizations through a permissioned approach.

The combined forces of Open Finance and Open Data with Agentic AI

Now imagine an individual powered by their agent, which, through a permissioned approach, executes instructions (commands) on the individual's or corporation's behalf.

Picture this scenario: an agent, properly identified, that through consent-led protocols can access the data providing it with real-world, up-to-date information, and is robust enough to perform a required task that may involve reading, writing, commanding and owning as needed.

For this to work in a seamless, secure way, proper frameworks, accountability, and standards must be developed. Whenever interacting with an agent, you need to know who the agent is, in what capacity it is acting, on whose behalf it is acting, and who the counterparty is (the consumer or corporation). Having a framework for identifying those counterparties is essential.

Errors occur. The building blocks for this to happen in a secure and trusted way are mechanisms through which you can identify the counterparty (who the agent is and on whose behalf it is acting), check whether certain rules and principles are followed, confirm whether regulation has been taken into account, and establish in what capacity the agent is acting.

The agent is an extension of the individual or corporation; it is not siloed. All its actions have consequences, and therefore rules and regulations must be followed.

The Framework: Learning from Open Finance and Open Data

At the centre of an open finance and open data architecture lie rules, governance, processes, and technology, commonly known as the trust framework: tooling equipped for safe and secure access to permission-based customer data, and a framework fundamental for building trust.

Trust requires standards. Adequate definitions around rules and standards are key for all participants. By levelling the playing field, we bring trust through communication mechanisms that share common principles, reducing friction and generating cost efficiencies. Rules and standards are key when thinking about scale and interoperability, particularly in environments where the size and type of participants vary widely.

A robust trust framework allows the creation of proper guardrails for an interconnected world. By using previously developed principles that helped shape open finance and open data ecosystems, we can architect the necessary requirements for a world where AI agents act on behalf of consumers in a secure, permissioned way.

We're not starting from scratch. The starting point is encouraging. The robust open finance and open data frameworks developed in multiple jurisdictions have coped well with the growing cybersecurity issues an increasingly connected world has brought. "Agentic AI's reliance on vast amounts of data raises privacy concerns. Balancing personalization with privacy is essential, while its autonomy introduces new cybersecurity risks" (WEF, How agentic AI will transform financial services with autonomy, efficiency and inclusion, December 2, 2024). Applying the learnings from the world of open finance and open data can help shape a more secure world of agentic AI.

Data is the new gold. Data is an asset that, through context, can generate value... It's a form of gold.

The initial idea behind empowering consumers by allowing them to take back control of their personal data (digital footprints) was to give them the right to use and extract value from what was rightfully theirs. The next phase of this process is turning all these data trails into a valuable asset, giving meaning to the words of Nandan Nilekani, one of the architects of the India Stack, who says that people will be data rich before being economically rich, given the wealth of information that can be collected from consumption and usage patterns across platforms, entities, and commerce.

Aggregating our data and giving it context turns it into a great source of value (a broader offering, delivered in a timelier manner, at a better price point, or in a more convenient way).

If you want to go fast and far, go together. Navigating the requirements to access our data directly could take ages. Along came third-party data providers who can access this information in a timely, efficient way by leveraging open finance capabilities. By providing context on consumer behaviour and circumstances, aggregated data allows for more tailored offerings. Suddenly, the principles behind open banking development surface.

Consent became the cornerstone. For this permissioned access approach, consent protocols were developed that capture the essence of data protection and payments regulation (e.g. GDPR, PSD2, etc.). They became key pillars of the open banking revolution, creating a framework for secure, permissioned access to consumers' data by third-party data providers.

Consent flows in an era of AI agents

Developing robust consent flows, and defining how these consents can be exercised by an AI agent on behalf of a consumer through proper verification, is fundamental. Not only will consumers need to identify themselves; there also needs to be a way of identifying the AI agent acting on their behalf at all times.

This requires rethinking consent. Adding an AI agent would require making its authorization to act on behalf of an individual or corporation part of the consent flow.
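One way to picture this rethought consent is as a single record that names all the parties at once: the principal, the agent, the agent's operator, and what the agent may do. The sketch below is a minimal illustration assuming hypothetical field names and scope strings such as `accounts:read`; it is not drawn from any existing open banking standard. The design choice it highlights is that a request is honoured only when the right agent asks for a granted scope before the consent expires.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class AgentConsent:
    """Hypothetical consent record naming principal, agent and permitted scopes."""

    principal_id: str       # individual or corporation granting the consent
    agent_id: str           # AI agent authorised to act for the principal
    operator_id: str        # organisation running the agent
    scopes: frozenset[str]  # e.g. {"accounts:read", "payments:initiate"}
    expires_at: datetime    # consent is invalid from this moment on

    def permits(self, agent_id: str, scope: str, at: datetime) -> bool:
        """True only if the named agent requests a granted scope before expiry."""
        return agent_id == self.agent_id and scope in self.scopes and at < self.expires_at


now = datetime(2025, 1, 1, tzinfo=timezone.utc)
consent = AgentConsent(
    principal_id="customer-42",
    agent_id="agent-7",
    operator_id="operator-a",
    scopes=frozenset({"accounts:read"}),
    expires_at=now + timedelta(days=90),
)
print(consent.permits("agent-7", "accounts:read", now))      # True
print(consent.permits("agent-7", "payments:initiate", now))  # False: scope not granted
print(consent.permits("agent-9", "accounts:read", now))      # False: different agent
```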

In the world of open banking, a user initiates a consent request via a third-party provider (TPP) for a financial service (payment initiation, account aggregation, etc.) and is then authenticated by their bank using SCA (Strong Customer Authentication). Once authentication succeeds, the bank generates a one-time authorization code, which the TPP exchanges for an access token using OAuth 2.0, a standard method for allowing a third-party application to securely access a user's data on another service. With this token, the TPP can access the data required to provide the service to the user.
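The flow described above is the OAuth 2.0 authorization code grant. The sketch below shows its two client-side messages, the redirect that sends the user to the bank and the code-for-token exchange, using only the Python standard library. It also adds PKCE (RFC 7636), an extension that binds the token exchange to the client that started the flow. PKCE is an assumption here, since the text does not mention it, and the endpoints, client ID and scope are hypothetical. Production open banking profiles layer further requirements on top (for example, stronger client authentication), which are omitted.

```python
import base64
import hashlib
import secrets
from urllib.parse import urlencode


def make_code_verifier() -> str:
    """Random PKCE verifier: 32 bytes encode to 43 base64url characters (43-128 allowed)."""
    return secrets.token_urlsafe(32)


def code_challenge_s256(verifier: str) -> str:
    """S256 challenge: base64url(SHA-256(verifier)) with '=' padding stripped."""
    digest = hashlib.sha256(verifier.encode("ascii")).digest()
    return base64.urlsafe_b64encode(digest).rstrip(b"=").decode("ascii")


def authorization_url(endpoint: str, client_id: str, redirect_uri: str,
                      scope: str, state: str, challenge: str) -> str:
    """Step 1: the TPP redirects the user to the bank, which performs SCA."""
    query = urlencode({
        "response_type": "code",
        "client_id": client_id,
        "redirect_uri": redirect_uri,
        "scope": scope,
        "state": state,  # echoed back so the TPP can reject forged callbacks
        "code_challenge": challenge,
        "code_challenge_method": "S256",
    })
    return f"{endpoint}?{query}"


def token_request_body(code: str, redirect_uri: str, client_id: str, verifier: str) -> str:
    """Step 2: form body POSTed to the token endpoint to swap the one-time code for a token."""
    return urlencode({
        "grant_type": "authorization_code",
        "code": code,
        "redirect_uri": redirect_uri,
        "client_id": client_id,
        "code_verifier": verifier,  # proves this client started step 1
    })


verifier = make_code_verifier()
url = authorization_url(
    "https://bank.example/authorize", "tpp-client-1", "https://tpp.example/callback",
    "accounts", secrets.token_urlsafe(16), code_challenge_s256(verifier),
)
body = token_request_body("one-time-code", "https://tpp.example/callback", "tpp-client-1", verifier)
print(url)
print(body)
```

Because the bank only releases a token to whoever can present the verifier, an intercepted one-time code is of little use on its own.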

Identification in a world of AI agents. One of the challenges agentic AI will face is security. We can leverage the learnings of the NFT world to create unique identifiers for individuals or companies using AI agents. Each individual or corporation using an agent will be issued a unique non-fungible representation: an identifier associated with every command and encoded with the consents granted for the specific task the agent will carry out. Identity needs to be embedded into the workflows to prevent rogue bots from impersonating individuals and corporations.
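The proposal above uses NFT-style unique identifiers. The sketch below swaps in a simpler technique to illustrate the same idea of binding identity and consent to every command: each principal receives a unique identifier, and each command is sealed with an HMAC over the identifier, the consent reference and the command itself. A verifier holding the key can then detect tampering or a command presented under someone else's identity. This is an assumption-laden illustration: the function names are hypothetical, a real deployment would more likely use public-key signatures or on-chain tokens, and key management is out of scope.

```python
import hashlib
import hmac
import json
import uuid


def issue_identifier() -> str:
    """Hypothetical unique, non-reusable identifier for a principal (stand-in for an NFT)."""
    return f"principal:{uuid.uuid4()}"


def _canonical(principal_id: str, consent_id: str, command: dict) -> bytes:
    # Deterministic JSON so signer and verifier hash exactly the same bytes.
    payload = {"principal_id": principal_id, "consent_id": consent_id, "command": command}
    return json.dumps(payload, sort_keys=True, separators=(",", ":")).encode("utf-8")


def seal_command(key: bytes, principal_id: str, consent_id: str, command: dict) -> dict:
    """Attach an HMAC-SHA256 tag binding the command to its principal and consent."""
    tag = hmac.new(key, _canonical(principal_id, consent_id, command), hashlib.sha256).hexdigest()
    return {"principal_id": principal_id, "consent_id": consent_id, "command": command, "tag": tag}


def verify_command(key: bytes, envelope: dict) -> bool:
    """Recompute the tag and compare in constant time; any altered field fails."""
    expected = hmac.new(
        key,
        _canonical(envelope["principal_id"], envelope["consent_id"], envelope["command"]),
        hashlib.sha256,
    ).hexdigest()
    return hmac.compare_digest(expected, envelope["tag"])


key = b"demo-shared-secret"  # hypothetical; real keys belong in a key-management system
principal = issue_identifier()
sealed = seal_command(key, principal, "consent-001", {"action": "pay", "amount": "25.00"})
forged = {**sealed, "principal_id": issue_identifier()}  # impersonation attempt
print(verify_command(key, sealed))  # True
print(verify_command(key, forged))  # False
```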

How do we build trust in Agentic AI by using Open Finance and Open Data frameworks?

Trust comes with process and transparency. For consumers to trust AI agents, they need to feel they can rely on the process, that it is transparent, and that there is traceability, which in turn provides them with security. For this to happen, a framework needs to be developed for a secure, permissioned, and standardized approach in which an AI agent can execute read, write, and own tasks on behalf of an individual or company, ensuring proper consents, security protocols, and regulatory compliance:

Framework for Secure Permissioned AI Agents

1. Consent Management

• Explicit Consent: Implement mechanisms to obtain explicit consent from users before any data access or task execution. This includes clear, understandable consent forms and options for users to manage their consents.

• Granular Permissions: Allow users to grant specific permissions for different types of data and tasks, ensuring they have control over what the AI agent can access and do.

• Revocation and Audit: Provide users with the ability to revoke consent at any time and maintain an audit trail of all consent transactions.
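Revocation and audit can be combined in one small structure. The sketch below is a hypothetical `ConsentLedger` that tracks which scopes each consent currently grants and appends every grant and revocation to a hash-chained log: each entry stores the hash of the previous one, so any later edit to the history breaks the chain. It is an in-memory illustration under those assumptions, not a production audit store.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: dict) -> str:
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


class ConsentLedger:
    """Active consents plus an append-only, hash-chained audit trail."""

    def __init__(self) -> None:
        self.active: dict[str, set[str]] = {}  # consent_id -> currently granted scopes
        self.log: list[dict] = []

    def _append(self, event: dict) -> None:
        prev = self.log[-1]["hash"] if self.log else GENESIS
        body = {**event, "at": datetime.now(timezone.utc).isoformat(), "prev": prev}
        self.log.append({**body, "hash": _digest(body)})

    def grant(self, consent_id: str, scopes: set[str]) -> None:
        self.active[consent_id] = set(scopes)
        self._append({"type": "grant", "consent_id": consent_id, "scopes": sorted(scopes)})

    def revoke(self, consent_id: str) -> None:
        # Revocation takes effect immediately and is itself recorded.
        self.active.pop(consent_id, None)
        self._append({"type": "revoke", "consent_id": consent_id})

    def allows(self, consent_id: str, scope: str) -> bool:
        return scope in self.active.get(consent_id, set())

    def verify_chain(self) -> bool:
        """Recompute every hash; False if any entry was altered or reordered."""
        prev = GENESIS
        for entry in self.log:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev"] != prev or _digest(body) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True


ledger = ConsentLedger()
ledger.grant("consent-001", {"accounts:read", "payments:initiate"})
print(ledger.allows("consent-001", "payments:initiate"))  # True
ledger.revoke("consent-001")
print(ledger.allows("consent-001", "payments:initiate"))  # False
print(ledger.verify_chain())                              # True
```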

2. Standardized APIs

• Unified API: Develop a unified API that combines the capabilities of MCP and Open Banking standards, supporting secure data connections, standardized data formats, and interoperability across different domains.

• Interoperability: Ensure the API is compatible with various data sources and systems, facilitating seamless integration and data exchange.
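As an illustration of what "standardized data formats" can mean in practice, the sketch below maps transactions from two different sources into one common schema through small adapter functions. Both source formats are hypothetical (source A's field names are only loosely styled on open banking account-information payloads), as is the `Transaction` schema itself. The point is that downstream agents only ever see the normalised shape, and adding a source means adding one adapter.

```python
from dataclasses import dataclass
from decimal import Decimal
from typing import Callable


@dataclass(frozen=True)
class Transaction:
    """Hypothetical common schema every source is mapped into."""

    account_id: str
    amount: Decimal  # signed: negative means money leaving the account
    currency: str
    description: str


def from_source_a(raw: dict) -> Transaction:
    # Source A: unsigned decimal string plus a Credit/Debit indicator.
    sign = -1 if raw["CreditDebitIndicator"] == "Debit" else 1
    return Transaction(
        account_id=raw["AccountId"],
        amount=sign * Decimal(raw["Amount"]["Amount"]),
        currency=raw["Amount"]["Currency"],
        description=raw.get("TransactionInformation", ""),
    )


def from_source_b(raw: dict) -> Transaction:
    # Source B: signed integer amount in minor units (pence, cents).
    return Transaction(
        account_id=raw["account"],
        amount=Decimal(raw["amount_minor"]) / 100,
        currency=raw["ccy"],
        description=raw.get("memo", ""),
    )


ADAPTERS: dict[str, Callable[[dict], Transaction]] = {
    "source_a": from_source_a,
    "source_b": from_source_b,
}


def normalise(source: str, raw: dict) -> Transaction:
    """Route a raw record to the adapter registered for its source."""
    return ADAPTERS[source](raw)


a = normalise("source_a", {
    "AccountId": "acc-1", "CreditDebitIndicator": "Debit",
    "Amount": {"Amount": "12.50", "Currency": "GBP"}, "TransactionInformation": "Coffee",
})
b = normalise("source_b", {"account": "acc-1", "amount_minor": -1250, "ccy": "GBP", "memo": "Coffee"})
print(a == b)  # True: same transaction, one shape
```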

3. Security Protocols

• Encryption: Use strong encryption methods for data in transit and at rest to protect sensitive information.

• Authentication and Authorization: Implement robust authentication and authorization mechanisms, including multi-factor authentication and role-based access controls.

• Adversarial Testing: Conduct regular adversarial testing to identify and mitigate potential security vulnerabilities.
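Role-based access control with step-up authentication can be expressed compactly. In the sketch below, the roles, the `Action` values and the rule that payments need a fresh multi-factor confirmation are all hypothetical choices made for illustration: an agent's role determines what it may attempt, and the most sensitive actions additionally require the human to have completed MFA. Unknown roles are denied by default.

```python
from enum import Enum


class Action(Enum):
    READ = "read"
    WRITE = "write"
    PAY = "pay"


# Hypothetical role-to-permission mapping for agents.
ROLE_PERMISSIONS: dict[str, frozenset[Action]] = {
    "viewer": frozenset({Action.READ}),
    "assistant": frozenset({Action.READ, Action.WRITE}),
    "payer": frozenset({Action.READ, Action.WRITE, Action.PAY}),
}

# Actions that also need a fresh multi-factor confirmation from the human principal.
STEP_UP_REQUIRED = frozenset({Action.PAY})


def authorize(role: str, action: Action, mfa_verified: bool) -> bool:
    """Allow the action only if the role grants it and any required MFA has been completed."""
    if action not in ROLE_PERMISSIONS.get(role, frozenset()):
        return False  # unknown roles get an empty permission set (deny by default)
    return mfa_verified or action not in STEP_UP_REQUIRED


print(authorize("assistant", Action.WRITE, mfa_verified=False))  # True
print(authorize("assistant", Action.PAY, mfa_verified=True))     # False: role lacks PAY
print(authorize("payer", Action.PAY, mfa_verified=False))        # False: MFA required
```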

4. Regulatory Compliance

• Data Protection Regulations: Ensure compliance with relevant data protection regulations such as GDPR, CCPA, and other local laws.

• Financial Services Regulation: Ensure adherence to risk management guidelines, front office procedures, cybersecurity policies, KYC procedures, customer identification programs, monitoring, as well as other international and local rules and regulations.

• Transparency and Accountability: Maintain transparency about how data is used and shared, and establish accountability mechanisms for AI agent actions.

• Ethical Guidelines: Adhere to ethical guidelines for AI development and deployment, ensuring fairness, non-discrimination, and respect for user privacy.

5. Consumer Empowerment and Transparency

• User Control: Provide users with clear options for data sharing and usage, allowing them to manage their data and permissions easily.

• Transparency: Ensure that users are informed about how their data is being used and the actions taken by the AI agent on their behalf.

6. Identification and Verification

• Agent Identification: Implement mechanisms to identify AI agents acting on behalf of users, ensuring that actions are traceable and verifiable.

• Verification Processes: Establish verification processes to confirm that AI agents are acting with proper consent and within the scope of granted permissions.
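Verifying that an agent stays "within the scope of granted permissions" can mean checking limits as well as scopes. The sketch below, loosely similar in spirit to the control parameters of variable recurring payment (VRP) mandates in open banking, checks the acting agent, a per-payment cap and a cumulative cap for the period before recording a payment. The class and field names are hypothetical, and resetting the period is left out for brevity.

```python
from dataclasses import dataclass
from decimal import Decimal


@dataclass
class PaymentMandate:
    """Hypothetical spending limits attached to an agent's payment consent."""

    agent_id: str
    max_per_payment: Decimal
    max_per_period: Decimal
    spent_this_period: Decimal = Decimal("0")

    def check_and_record(self, agent_id: str, amount: Decimal) -> bool:
        """Approve and record a payment only if every limit is respected."""
        if agent_id != self.agent_id or amount <= 0:
            return False  # wrong agent, or a nonsensical amount
        if amount > self.max_per_payment:
            return False  # single payment too large
        if self.spent_this_period + amount > self.max_per_period:
            return False  # would breach the cumulative cap
        self.spent_this_period += amount
        return True


mandate = PaymentMandate("agent-7", max_per_payment=Decimal("100"), max_per_period=Decimal("250"))
print(mandate.check_and_record("agent-7", Decimal("90")))   # True
print(mandate.check_and_record("agent-7", Decimal("120")))  # False: over the per-payment cap
print(mandate.check_and_record("agent-9", Decimal("10")))   # False: not the mandated agent
print(mandate.spent_this_period)                            # 90
```

Rejected requests leave the running total untouched, so a refused payment never eats into the consumer's remaining allowance.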

7. Pilot Projects and Use Cases

• Domain-Specific Pilots: Start with pilot projects in specific domains such as finance, healthcare, or retail to test and refine the framework.

• Real-World Scenarios: Use real-world scenarios to identify challenges and opportunities for improvement, ensuring the framework is robust and effective.

Potential Benefits

• Enhanced AI Capabilities: AI agents can leverage real-time, context-aware data to provide more accurate and relevant responses.

• Consumer Empowerment: Users gain greater control over their data, leading to more personalized and beneficial services.

• Innovation and Competition: A universal standard can drive innovation and competition, leading to better products and services across various industries.

By following the steps of this Universal Data Access Framework (UDAF), we can develop a secure, permissioned, standardized approach that allows AI agents to act on behalf of individuals or companies while abiding by proper consents, strict security protocols, and strong regulatory compliance.

Conclusion

UDAF leverages the strengths of the consumer consent, privacy, and regulatory compliance frameworks developed for Open Banking and Open Finance, incorporating the capabilities of MCP (or other AI protocols that may arise) for seamless integration and interoperability.

This approach not only enhances the functionality of AI agents but also ensures that they operate within predefined, consent-based approaches to accessing data. Consumers, whether individuals or organizations, can benefit from AI agents that act on their behalf, performing tasks with precision and efficiency, while maintaining control and traceability.

The framework aims to foster innovation and competition across multiple sectors, driving the development of new products and services that cater to the evolving needs of users.

The future is within reach. By adopting this unified framework, we build towards addressing the challenges of data privacy, security, and interoperability in the age of AI. The integration of Open Banking and Open Finance frameworks with MCP provides an un-siloed solution that keeps consumers in control of their data while they benefit from the augmentation AI brings to personalized and context-aware services. This thesis explores the potential of this integration, highlighting the benefits and opportunities it presents for creating a secure, permissioned, and standardized approach to data access across various domains.

As we move towards a more interconnected and data-driven world, this unified framework promises to be a pivotal step in harnessing the full potential of AI and data sharing for the benefit of all stakeholders.
